Powered by Pinaki IT Hub – Shaping the Guardians of the Digital Future

Cybersecurity has always been a battlefield of strategy, intelligence, and adaptation. But in today’s world, a new, powerful, and highly deceptive threat has emerged — Deepfakes. These AI-generated videos and audio recordings are so realistic that they can easily mimic anyone’s face, voice, tone, and mannerisms. While deepfakes once seemed like entertainment or harmless experiments, they are now being used in fraud, misinformation campaigns, identity theft, extortion, and corporate manipulation. This blog explores what deepfakes are, how they are created, why they are dangerous, and how ethical hackers and security professionals can defend against them — along with practical steps for individuals and businesses.

1. What Are Deepfakes and How Do They Work? (In-depth, point-by-point explanation)

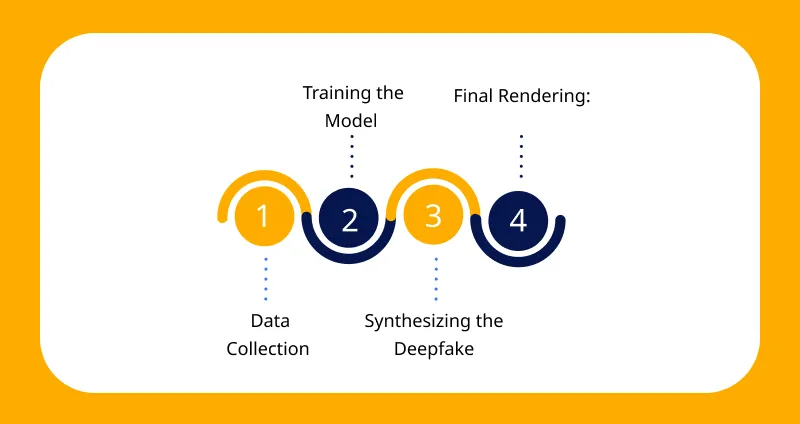

At its core, a deepfake is any piece of digital media (an image, audio clip, or video) that has been synthesized or manipulated by machine learning models so that it appears to show a real person doing or saying something they did not actually do. Deepfakes are distinct from crude photoshops or simple audio edits because they rely on statistical models that learn a person’s visual and vocal characteristics from data and then reproduce those characteristics in new contexts. The output is often not simply “stitched together” media but a coherent, generative recreation that preserves micro-details of behavior: the micro-expressions, timing, inflections, lighting interactions, and other subtleties that make humans trust what they see and hear. Below we unpack the main technological and behavioral building blocks of deepfakes, why those blocks make the results convincing, and what that implies for detection and defense.

How deepfakes differ from traditional media manipulation

● Traditional manipulation tools (cut-and-paste, manual rotoscoping, basic audio splicing) require human craft and typically leave visible artifacts — seams, unnatural motion, or inconsistent audio levels.

● Deepfakes are data-driven: rather than a human hand placing a mouth over a face, a model statistically learns the mapping between expressions, sounds, and visual features, then generates new frames or waveforms that are internally consistent across time.

● Because they are generated by learned models, deepfakes can produce many unique, consistent outputs quickly: multiple video takes, different lighting, or varied speech intonations — all matching the same target persona.

The role of deep learning: why the term “deepfake” exists

● The “deep” in deepfakes comes from deep learning — neural networks with many layers that can learn hierarchical patterns from raw data.

● Deep learning models move beyond handcrafted rules; they learn feature representations automatically (e.g., the way cheek muscles move when a person smiles) and can generalize those patterns to generate new, believable outputs.

● This enables abstraction: the model doesn’t memorize a single frame, it learns what “smiling” means for an individual and can synthesize that smile in new contexts.

a) Generative AI models: creating new content rather than copying

● Generative models are optimized to produce data that matches the distribution of the training data. In deepfakes, that means images and audio that are statistically similar to the real person’s media.

● Key behaviors of generative models in this context:

○ Synthesis: generating new frames or audio samples that were not recorded but appear authentic.

○ Interpolation: creating smooth transitions between expressions, head angles, or phonemes that the model interpolates from learned examples.

○ Adaptation: adjusting to new lighting, camera angles, or backgrounds so the generated output fits a target scene.

● Why this matters: a good generative model can convincingly put a public figure into a scene that never happened (speech, interview, courtroom testimony) because it has learned, statistically, how that person looks and sounds across many situations.

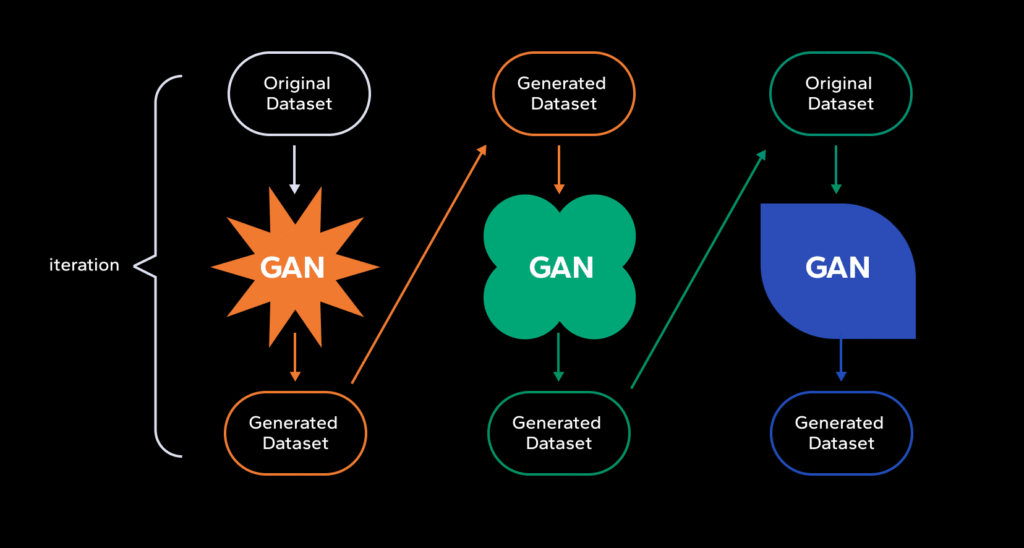

How GANs (Generative Adversarial Networks) produce realism

● GANs work as a competitive pair:

○ The Generator tries to create synthetic media that looks real.

○ The Discriminator tries to tell generated media from real media.

● Through repeated adversarial training, the generator learns to hide the subtle statistical traces that the discriminator uses to detect fakes.

● Practical consequences:

○ Early GANs produced blurrier images; modern variants (progressive GANs, StyleGAN) produce high-resolution faces with correct textures, pores, and hair detail.

○ The adversarial process pushes the generator to correct micro artifacts (lighting mismatch, unnatural skin texture), producing outputs that pass human scrutiny and evade simple algorithmic checks.
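The adversarial game described above is usually written as the minimax objective from the original GAN formulation (Goodfellow et al., 2014). Here $D(x)$ is the discriminator's estimated probability that a sample $x$ is real, and $G(z)$ is the generator's output for random noise $z$:

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator raises $V$ by scoring real samples near 1 and generated ones near 0; the generator lowers it by pushing $D(G(z))$ toward 1. In practice many implementations instead train the generator to maximize $\log D(G(z))$, which gives stronger gradients early in training when the fakes are still easy to spot.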

b) Neural networks and machine learning: learning behavior, not just appearance

● Neural networks used for deepfakes are trained on three complementary streams of data: static images, video sequences, and audio when voice cloning is involved. Each stream teaches different aspects:

○ Static images teach shape, color, texture.

○ Video sequences teach motion, timing, and temporal continuity.

○ Audio teaches prosody, pronunciation patterns, and phoneme-to-mouth-motion correlations.

● Important learned features:

○ Facial landmarks: positions of eyes, nose, mouth relative to face geometry.

○ Temporal dynamics: how expressions change frame-to-frame (for example, the timing of a blink).

○ Idiosyncratic behaviors: specific mannerisms, habitual smiles, throat clearing, speech cadence.

● Why behavior learning is key:

○ Humans judge authenticity by consistent behavior over time. Models that learn behavior can reproduce those consistencies — a powerful reason why modern deepfakes look alive rather than like pasted stills.

Training datasets: quantity, diversity, and quality matter

● The more diverse the training data the model sees (angles, lighting, expressions, ages), the more robust its outputs.

● Public platforms are a rich source: interviews, social media clips, podcasts, and public speeches become training material.

● Small data techniques: With modern approaches, even limited samples (tens of seconds of audio or a few dozen images) can be sufficient for a convincing result due to transfer learning and model pretraining on large, generic datasets.

● Practical implication: Privacy leakage is a core risk — content you post publicly can be repurposed to train a convincing synthetic replica of you.

c) Voice cloning and speech synthesis: the audio threat

● Voice cloning moves beyond simple mimicry of timbre; it models prosody (how pitch and emphasis vary), micro-timing (pauses and inhalations), and commonly used phonetic inflections. Modern systems can:

○ Recreate an emotional tone (anger vs. calm).

○ Imitate the speaker’s rhythm and habitual hesitations.

○ Produce speech in different acoustic environments (adding reverberation to match a particular room).

● How it’s done:

○ Text-to-Speech (TTS) backbones are combined with speaker embeddings that capture a person’s vocal signature.

○ Some approaches use voice conversion: transform one recording to sound like another speaker while preserving the spoken content.

● Security implications:

○ Attackers can generate plausible authorization calls, spoof customer verification steps, or fabricate voice evidence.

○ Short voice samples are increasingly sufficient for convincing clones, lowering the barrier to misuse.
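Many voice-verification systems reduce a recording to a fixed-length speaker embedding and accept the caller if it is similar enough to the enrolled one. The sketch below assumes such embeddings already exist; the 4-dimensional vectors and the 0.8 threshold are made-up placeholders, not values from any real product. It shows why a clone whose embedding lands close to the genuine speaker's passes exactly the same check.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def accept_caller(enrolled: list[float], incoming: list[float], threshold: float = 0.8) -> bool:
    """Hypothetical similarity-threshold check; real systems tune the threshold on labeled data."""
    return cosine_similarity(enrolled, incoming) >= threshold


# Toy embeddings; real speaker embeddings often have hundreds of dimensions.
enrolled_ceo = [0.9, 0.1, 0.3, 0.2]
cloned_voice = [0.85, 0.15, 0.28, 0.22]  # a good clone lands near the enrolled voice
other_person = [0.1, 0.9, 0.2, 0.4]

print(accept_caller(enrolled_ceo, cloned_voice))  # True: the clone passes
print(accept_caller(enrolled_ceo, other_person))  # False
```

The check only measures closeness to the enrolled voice; it has no notion of whether the audio was produced by a human throat, which is why voice biometrics are usually paired with other factors.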

d) Face-swapping and expression mapping: the illusion of continuity

● Face swapping typically involves two steps:

○ Alignment and mapping: extracting facial landmarks from reference images of the target person and from each frame of the source video, then mapping one set onto the other.

○ Synthesis and blending: generating new face pixels for each source frame and blending them seamlessly into that frame to match its lighting and color.

● Expression mapping uses models that transfer expression parameters (smile, brow raise, speech mouth shapes) from the source actor to the target face in a way that preserves individuality while matching motion.

● Modern systems also correct for:

○ Color grading and temporal coherence: ensuring that generated faces maintain consistent color and lighting across frames so no single frame appears “off”.

○ Physical plausibility: matching shadows and specular highlights, which were earlier giveaways.

● Why these techniques make detection hard:

○ Good blending and temporal smoothing remove the flicker or jitter that earlier fakes exhibited.

○ Micro-expressions and synchronized lip movements reduce suspicion on close viewing.

The learning cycle: why deepfakes improve continuously

● Iterative model improvement: models trained on more data and on outputs from earlier models learn to replicate and then fix earlier generation mistakes.

● Transfer learning and pretraining: developers start from large, general-purpose generative models and fine-tune them to a specific person, drastically reducing data requirements and training time.

● Community sharing and tooling: open-source frameworks, prebuilt model checkpoints, and user-friendly GUIs democratize access; malicious actors can leverage these same resources.

● Crowdsourcing and feedback: as more deepfakes are produced and analyzed, both creators and detectors learn — an arms race where each side pushes the other to improve.

Why detecting deepfakes has become increasingly difficult

● Earlier artifacts that made fakes obvious have been systematically addressed by:

○ Modeling blinking and eye movement realistically.

○ Ensuring smooth frame-to-frame transitions and consistent motion blur.

○ Matching lighting direction and cast shadows precisely.

● Newer deepfakes incorporate audio-visual consistency: lip motion correctly matches phonemes and intonation, and the audio signal’s spectral features match the generated voice’s physical production cues.

● Simple forensic markers (missing metadata, inconsistent file headers) are no longer reliable because sophisticated pipelines also forge or preserve metadata and container properties.

● The human perceptual system, which evolved to detect subtle inconsistencies, is being outpaced because synthetic artifacts are becoming fewer and more subtle.

The psychological dimension: why realistic deepfakes are dangerous

● People trust faces and voices on a primal level; visual and auditory cues strongly influence credibility judgments.

● Deepfakes exploit that trust to:

○ Persuade victims in targeted scams.

○ Create believable “evidence” in political smear campaigns.

○ Rapidly seed misinformation that normalizes a false narrative.

● Even after exposure, fake media can continue to influence public perception through repetition and partisan echo chambers. The mere existence of a convincing deepfake can seed doubt and erode trust in genuine media.

Practical examples of mechanisms and attack vectors (short scenarios)

● CEO Voice Fraud: attacker generates a short voice clip of a CEO instructing a subordinate to transfer funds. The subordinate, trusting voice and context, executes payment.

● Phony Emergency from Family: attacker uses a cloned voice of a relative pleading for money under duress. Emotional content combined with perceived authenticity accelerates compliance.

● Political Disinformation: a fabricated short video clip of a candidate saying inflammatory statements is released hours before a debate, shaping social media conversations and news cycles.

● Fake Compliance Evidence: generated video showing an employee making an admission, used to coerce settlements or justify firings.

Each scenario demonstrates how deepfakes are effective precisely because they align with human heuristics: temporal continuity, behavioral consistency, and emotional salience.

Technical limits and current telltales (what detectors still look for)

● Spectral inconsistencies in audio (unnatural high-frequency content or phase artifacts).

● Subtle pixel-level anomalies, especially in challenging regions like hairlines, teeth, and fast motion blur.

● Temporal artifacts that appear when stepping through a video frame by frame (stitching errors that are invisible at normal playback speed sometimes show up in individual frames).

● Inconsistent reflections (eye reflections that don’t match scene lighting).

● Unnatural micro-expressions or micro-movements that are off in timing or amplitude for the individual.

Note: these telltales are shrinking as models improve; what is detectable today may be invisible tomorrow without adaptive detection methods.
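A simple way to hunt for the temporal artifacts listed above is to measure how much each frame differs from the previous one and flag changes that are statistical outliers for that clip. This is a toy sketch: it assumes frames have already been decoded into equal-length lists of grayscale pixel values (decoding is out of scope), and the z-score threshold of 3.0 is an arbitrary illustrative choice.

```python
import statistics


def frame_differences(frames: list[list[float]]) -> list[float]:
    """Mean absolute pixel change between each pair of consecutive frames."""
    return [
        sum(abs(a - b) for a, b in zip(prev, curr)) / len(curr)
        for prev, curr in zip(frames, frames[1:])
    ]


def flag_outlier_transitions(frames: list[list[float]], z_threshold: float = 3.0) -> list[int]:
    """Return indices i where the jump from frame i to frame i+1 is an outlier for this clip."""
    diffs = frame_differences(frames)
    mean = statistics.mean(diffs)
    stdev = statistics.pstdev(diffs)
    if stdev == 0:
        return []  # perfectly uniform motion: nothing stands out
    return [i for i, d in enumerate(diffs) if (d - mean) / stdev > z_threshold]


# Toy clip: 60 frames of 4 pixels that brighten smoothly, with one glitched frame at index 30.
clip = [[float(k)] * 4 for k in range(60)]
clip[30] = [200.0] * 4
print(flag_outlier_transitions(clip))  # [29, 30]: the jumps into and out of frame 30
```

Real detectors work on full-resolution video with much richer features; the idea illustrated here is only that abrupt, isolated frame-to-frame changes are worth a closer look.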

The high-level takeaway: deepfakes attack trust, not just media

● Deepfakes are an existential challenge to mediated communication because they can replicate the social signals that underpin trust: faces, voices, timing, and emotion.

● The technology is neutral: it enables creative uses (film dubbing, visual effects, accessibility tools) but also introduces misuse risks at scale.

● Defending against deepfakes requires a combination of technical detection, process design (verification workflows), and public awareness — because technology alone cannot completely eliminate the problem.

Practical advice summary (concise, actionable)

● Assume public media can be used to train generative models — be cautious about what you post publicly.

● Treat unexpected audio/video requests for action (especially financial) as high-risk; verify via an independent channel.

● Organizations should incorporate multi-factor verification and avoid single-channel trust decisions (e.g., “the boss called” isn’t enough).

● Invest in combined audio-visual detection and human analytic workflows — detection AI plus trained analysts remains the best defense.
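The advice to verify through an independent channel can be encoded as a simple approval rule. The sketch below is hypothetical: the `PaymentRequest` fields, the channel names, and the 10,000 limit are invented for illustration. A request arriving by a remote channel is never approved on that channel alone; it needs a call-back to an independently sourced number, and large amounts also need a second approver.

```python
from dataclasses import dataclass

# Illustrative set of channels an attacker can spoof or impersonate with a deepfake.
REMOTE_CHANNELS = {"phone", "video_call", "voice_message", "email", "chat"}


@dataclass
class PaymentRequest:
    amount: float
    channel: str                     # how the instruction arrived
    callback_verified: bool = False  # confirmed by calling back a number from the company directory
    second_approver: bool = False    # an independent colleague signed off


def may_execute(req: PaymentRequest, dual_control_limit: float = 10_000) -> bool:
    """Return True only if the request passes the (hypothetical) verification policy."""
    if req.channel in REMOTE_CHANNELS and not req.callback_verified:
        return False  # a familiar voice or face on one channel is not proof of identity
    if req.amount >= dual_control_limit and not req.second_approver:
        return False  # large transfers always need a second person
    return True


urgent_call = PaymentRequest(amount=240_000, channel="phone")
print(may_execute(urgent_call))  # False: no independent call-back yet
```

The design goal is that no single signal, however convincing, is sufficient: the call-back uses a channel the attacker does not control, and dual control means one deceived employee cannot complete the transfer alone.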

2. Why Deepfakes Are a Major Cybersecurity Threat

Deepfakes are not just visual tricks; they are psychological weapons. They attack the foundational idea that seeing and hearing someone is proof of authenticity. Human beings trust faces, voices, and emotional tone far more than written text or digital signatures, and deepfakes exploit this deeply rooted cognitive bias. When artificial intelligence can make a person appear to say or do something they never did, reality becomes negotiable, and this is where the threat becomes dangerous. The consequences extend from private individuals to corporations, law enforcement, and entire governments. Once the boundary between real and fake collapses, society loses its shared truth and trust becomes fragile. Below are the main reasons why deepfakes have become one of the most serious cyber threats of our time:

1. Financial Fraud and Corporate Scams

How Corporate Trust Turns Into a Security Weakness

In any company, authority structures are clear. Employees are trained to follow instructions from senior leaders, especially CEOs, CFOs, Directors, and Department Heads.

Deepfake attackers exploit this culture of obedience.

How These Attacks Happen

- Criminals first collect publicly available recordings of an executive from:

○ YouTube interviews

○ Webinars and corporate events

○ Media coverage

○ Social networking posts

- AI voice-cloning tools analyze these recordings to capture:

○ The person’s accent

○ Speaking rhythm

○ Breathing pattern

○ Emotional tone

- The attacker then makes a phone call or sends a video message, sounding convincingly like the executive.

- They issue urgent instructions such as:

“This payment must be processed immediately. I will explain later.”

Because urgency and authority are familiar communication patterns, employees don’t question the request.

Why This Is So Dangerous

● No password was hacked.

● No server was breached.

● No malware was installed.

The attack bypasses technology completely and manipulates human judgment. It shifts cybercrime from hacking computers to hacking trust.

2. Identity Theft and Bypassing Biometric Security

Your Face and Voice Are Becoming Your Password, But They Are Now Replicable

Many institutions now rely on biometric authentication:

● Face recognition (KYC)

● Voice-based verification for banking

● ID verification through video calls

● Digital onboarding for jobs or financial services

Deepfakes now mimic:

● Facial muscle movement

● Natural blinking patterns

● Lip synchronization during speech

● Emotional tone in voice

This can allow attackers to pass identity checks, especially those without robust liveness detection, that were once considered extremely secure.

What Can Happen

● New bank accounts opened in your name

● SIM cards issued using your digital identity

● Fraudulent loan approvals

● Unauthorized access to your current financial accounts

Identity theft is no longer about stealing numbers and documents. It is about stealing your entire digital existence.

This means:

● Your identity can be used to commit crimes.

● You may be blamed for actions you never took.

● Legal and emotional recovery may take years.

3. Political Manipulation and Public Opinion Influence

Deepfakes Can Reshape Nations Without a Single Bullet Fired

Politics relies heavily on perception. Leaders use speeches, interviews, and media interactions to shape beliefs and build trust. Deepfakes have the power to distort this process dramatically.

Imagine These Scenarios

A fake video appears showing:

● A president declaring military action

● A prime minister insulting a religious or minority community

● A political candidate accepting bribes

● A leader discouraging voting

Before the truth is verified, the public reacts. By the time fact-checkers intervene, emotions are already activated — and emotional reactions are extremely hard to reverse.

The Psychological Damage

Even after official clarification:

● A portion of the population continues to believe the fake

● Trust in media and institutions declines

● Society becomes divided and polarized

Deepfakes therefore do not just spread misinformation — they destroy the possibility of shared truth.

This leads to:

● Social unrest

● Political instability

● Long-term distrust in government

4. Blackmail, Emotional Manipulation & Reputation Damage

When Deepfakes Hit Personal Lives

Deepfake technology can place someone’s face into:

● Explicit content

● Controversial statements

● Criminal activity simulations

Even if the video is fake:

● The emotional damage is real

● The social consequences are real

● The psychological trauma is real

Common Pattern of Abuse

- A fake video is generated.

- The victim receives threats:

“Pay or we will send this to your family, school, office, or social media.”

- Fear, shame, shock, and panic drive compliance.

This type of attack targets:

● Teenagers

● Women

● Professionals

● Celebrities

● Regular social media users

Anyone with photos online can become a target.

This is digital extortion, enhanced by AI.

5. Social Engineering Through Fake Video Calls

The Most Dangerous Form of Deception: Real-Time Deepfake Avatars

Advanced models can now generate real-time deepfake faces and voices for live video calls.

Which means:

● A video call no longer proves identity.

Possible Exploitation Scenarios

● A fake HR manager conducts an interview to collect personal data.

● A fake family member video calls asking for emergency money.

● A fake tech support agent gains access to secure accounts.

Because the sight and voice seem real, victims do not feel suspicious.

This is the highest form of psychological manipulation: replacing reality with a believable illusion.

The Core Insight

Deepfakes are not dangerous because they exist. They are dangerous because they erode the concept of truth itself.

Once people stop trusting:

● What they hear

● What they see

● What they emotionally respond to

then society loses the ability to make decisions based on facts. Deepfakes do not just attack individuals. They attack the foundation of trust that keeps society functioning.

3. Real Incidents That Prove the Danger

When Technology Deceives, and People Pay the Price

The rise of deepfake technology has shifted the boundaries between truth and illusion. What once required Hollywood-level editing is now achievable by anyone with a decent computer and the right software. While many still see deepfakes as harmless tools for fun videos or entertainment, real-world incidents have already proven how dangerous and costly they can be. Let’s explore a few alarming examples that demonstrate how deepfakes are being weaponized to manipulate minds, steal money, and erode trust globally.

Case 1: The Deepfake CEO Voice Scam

In one of the most shocking corporate frauds in recent years, a UK-based energy firm lost over $240,000 because of a deepfake voice impersonation.

The scam unfolded like this:

An executive at the firm received a phone call that seemed to come directly from the chief executive of the firm’s German parent company. The voice on the other end spoke with the same accent, tone, and authority as his boss. The “boss” urgently instructed him to transfer funds to a Hungarian supplier to close an important deal. Trusting the familiar voice, he complied, only to find out later that the call was entirely synthetic, generated by AI voice-cloning technology.

This incident highlights two crucial truths:

- Human psychology is the weakest link: people trust familiar voices and authority figures.

- Deepfake audio doesn’t need to be perfect; it only needs to sound believable enough under pressure.

For organizations, this was a wake-up call. It emphasized the urgent need for multi-step verification before approving financial transactions, especially when instructions come through voice or video communication.

Case 2: The Zelensky “Surrender” Video — Deepfakes in Warfare


During the Russia–Ukraine conflict, deepfakes entered the battlefield — not as weapons of destruction, but as tools of psychological warfare. A fake video of Ukrainian President Volodymyr Zelensky began circulating on social media, showing him urging soldiers to lay down their arms and surrender. The video, though poorly made, was convincing enough at a glance to cause confusion and concern among viewers. The video was swiftly debunked by Ukrainian officials, but the damage was already done. It raised a chilling question — “What happens when false information spreads faster than the truth?” This incident demonstrated how deepfakes can influence morale, manipulate public perception, and distort political realities. Even though this particular fake was caught early, future iterations — powered by more advanced AI — could be far harder to detect. Governments worldwide are now grappling with how to defend against digital misinformation campaigns that blend truth and fiction seamlessly.

Case 3: Celebrity Deepfake Investment Scams in India

In India, deepfake technology has created a new breed of scam that preys on public trust in celebrities. Fraudsters have used AI-generated videos of famous actors and influencers seemingly promoting fake investment schemes, crypto apps, and stock platforms. In these videos, the celebrities appear to personally endorse these services, even urging viewers to “invest quickly before the offer ends.” Thousands of unsuspecting viewers believed these endorsements and transferred money, only to realize later that the videos were fabricated.

The result:

● Massive financial losses among individuals.

● Damage to celebrity reputations.

● A trust crisis for legitimate brands and influencers.

Indian law enforcement agencies are increasingly dealing with such AI-driven financial crimes, but deepfake detection remains a race against time.

More Than a Tech Problem — A Psychological Weapon


Each of these incidents reveals a sobering reality:

Deepfakes don’t just manipulate pixels; they manipulate people. They exploit our deepest psychological instincts: trust in authority, recognition of familiar faces and voices, and emotional reactions to credible imagery. Unlike typical cyberattacks that target systems, deepfakes target belief. Once that belief is shaken, the consequences ripple through societies, economies, and democracies. These cases underscore that deepfakes are not just a technological challenge but a human vulnerability issue.

From these real incidents, here are some clear takeaways:

- Verification is non-negotiable: whether in business or personal communication, double-check the source of every voice or video before taking action.

- Digital literacy is crucial: people must learn how to identify signs of manipulation, especially in the era of hyper-realistic AI media.

- Policy and detection tools must evolve: governments and tech companies need to invest in AI-based detection systems that can flag deepfakes before they go viral.

- Ethical responsibility in AI development: as creators of AI technologies, developers must build in safeguards and watermarking systems to prevent misuse.

The rise of deepfakes marks a turning point in how truth is defined in the digital world. From multinational boardrooms to warzones and social media feeds, AI-generated deception is becoming a real and present danger. If left unchecked, deepfakes could erode public trust to a point where people no longer believe what they see or hear, a world where “seeing is believing” no longer holds true. The incidents of corporate scams, political manipulation, and celebrity impersonation are just the beginning. What we do next as individuals, organizations, and societies will determine whether we control this technology or become victims of it.

4. How Ethical Hackers Are Defending Against Deepfakes

The New Frontline of Digital Truth

As deepfakes become more sophisticated, the world needs a new kind of defender, one that can see beyond surface-level deception. Enter the ethical hacker: a professional once known primarily for testing firewalls and uncovering software vulnerabilities, now evolving into a guardian of media authenticity. In today’s digital landscape, where a single fake video can spark panic, move markets, or destroy reputations, ethical hackers have become truth detectives, working to expose digital manipulation and restore public trust.

Traditionally, ethical hackers (or “white-hat hackers”) focused on protecting computer systems, applications, and data from malicious attacks. But with the explosion of AI-generated media, their mission now extends far beyond networks — into the realm of digital truth verification.

Here’s how their roles have expanded in the fight against deepfakes:

1. Analyzing Video and Audio for Manipulation

Ethical hackers use advanced tools and AI models to detect subtle distortions in videos and voice recordings that may not be visible to the naked eye.

They look for:

● Unnatural lip movements or mismatched audio syncing

● Inconsistent lighting and shadow placement

● Artifacts or pixel distortions left behind by synthetic generation tools

By identifying these microscopic cues, they can often determine whether a piece of media has been tampered with, even when it appears flawless to viewers.

2. Conducting Digital Forensics to Trace Media Origins

One of the key defenses against deepfakes is digital forensics: the process of tracing a media file’s creation, modification, and sharing trail.

Ethical hackers analyze:

● Timestamps and metadata embedded in files

● Source IP addresses (where platforms or legal processes make them available) to help locate the uploader

● File hashes that reveal whether a copy differs from a known original

By reconstructing the chain of creation, they can distinguish between authentic media and synthetic fabrications, often uncovering the origin of misinformation campaigns.
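In practice, a hash comparison answers a narrow but useful question: is this copy byte-for-byte identical to a known original? A minimal sketch using Python's standard `hashlib`, assuming you already hold the original file or a trusted published SHA-256 digest of it:

```python
import hashlib


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large videos never need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_original(path: str, trusted_hash: str) -> bool:
    """True if the file is byte-for-byte identical to the trusted original."""
    return sha256_of_file(path) == trusted_hash.lower()


# Usage (placeholder path and digest):
# matches_original("press_clip.mp4", "<published sha256 hex digest>")
```

Any re-encode, crop, or edit changes the digest, so a mismatch alone does not prove malicious manipulation (platforms routinely re-compress uploads). When near-duplicate matching is needed, investigators turn to perceptual hashes instead.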

3. Training Detection Models to Recognize Synthetic Patterns

Deepfake technology is evolving — and so are the defense systems built to fight it. Ethical hackers collaborate with data scientists to train AI detection models capable of spotting synthetic patterns in both visuals and audio. These models learn from vast datasets of known deepfakes and real content to identify the minute differences between human-made and machine-generated media.

Examples include:

● Convolutional Neural Networks (CNNs) that analyze facial texture patterns

● Recurrent Neural Networks (RNNs) that assess temporal inconsistencies in videos

● Audio fingerprinting algorithms that catch unnatural sound modulation

The goal: stay one step ahead of fake media, and make AI a part of the solution, not just the problem.
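Production detectors are deep networks such as the CNNs and RNNs listed above, trained on large labeled datasets, but the supervised-learning loop underneath is the same as in far simpler models. As a deliberately tiny stand-in (not a CNN or RNN), this sketch trains a logistic-regression classifier with gradient descent on two hypothetical hand-made features per clip; the feature names and toy values are invented for illustration.

```python
import math


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_logistic(samples, labels, lr=0.5, epochs=2000):
    """Fit weights w and bias b by full-batch gradient descent on the mean log-loss."""
    n, d = len(samples), len(samples[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, y in zip(samples, labels):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y  # predicted minus true
            for j in range(d):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def fake_probability(w, b, x) -> float:
    """Model's estimated probability that a clip with features x is synthetic."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


# Toy, made-up features per clip: [blink-rate deviation, lip-sync lag in frames]; label 1 = fake.
X = [[0.1, 0.0], [0.2, 1.0], [0.0, 0.5], [0.9, 3.0], [0.8, 4.0], [1.0, 2.5]]
y = [0, 0, 0, 1, 1, 1]
w, b = train_logistic(X, y)
print(fake_probability(w, b, [0.85, 3.5]) > 0.5)  # True
print(fake_probability(w, b, [0.1, 0.2]) > 0.5)   # False
```

Swapping in richer features or a neural network changes the model, not the loop: predict, measure the error against labeled examples, and nudge the parameters. That is also why detectors must be retrained as new generation techniques appear.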

4. Monitoring Dark Web Communities

While the open internet shows the effects of deepfakes, the dark web often holds their origins. Ethical hackers actively monitor these underground networks where deepfake tools, datasets, and tutorials are shared or sold. By infiltrating and tracking these spaces, they gather intelligence about new tools or emerging threats before they reach mainstream use.

This proactive monitoring allows cybersecurity teams to prepare defenses early, patch vulnerabilities, and inform law enforcement about upcoming risks.

5. Educating Corporate Teams and the Public

Perhaps one of the most powerful tools ethical hackers wield is awareness.

They conduct workshops, simulations, and corporate training sessions to teach employees how to:

● Verify sources before acting on visual or verbal instructions

● Detect signs of manipulated media in communication channels

● Implement zero-trust verification policies before executing financial or strategic decisions

In a world where “seeing is no longer believing,” knowledge is the first line of defense.

Behind the scenes, deepfake detection is a complex blend of human expertise and AI-assisted analysis. Ethical hackers rely on a combination of technical forensics and behavioral science to expose the digital illusions.

Here’s what goes on under the hood:

1. Frame-by-Frame Visual Analysis

Every second of a video contains dozens of frames. Ethical hackers dissect videos frame by frame, scanning for inconsistencies such as:

● Unnatural blinking patterns

● Sudden pixel noise or blur

● Slight color mismatches across frames

Even the most advanced deepfake algorithms struggle to maintain perfect consistency across hundreds of frames, and this microscopic inspection often reveals the manipulation.
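Blink analysis is a common example of frame-by-frame inspection. One widely used measure is the eye aspect ratio (EAR) proposed by Soukupová and Čech (2016), computed from six landmarks around each eye: it stays roughly constant while the eye is open and drops sharply during a blink. This sketch assumes the landmarks come from some face-landmark detector (not shown) and uses a hypothetical 0.2 threshold and 2-frame minimum; counting blinks per minute and comparing the rate with what is normal for that person or setting is then straightforward.

```python
import math

Point = tuple[float, float]


def eye_aspect_ratio(eye: list[Point]) -> float:
    """EAR for six landmarks ordered p1..p6 (p1/p4 = corners, p2/p3 = top, p5/p6 = bottom)."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame: list[float], threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below the threshold."""
    blinks, run = 0, 0
    for ear in ear_per_frame:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended mid-blink
        blinks += 1
    return blinks


open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(round(eye_aspect_ratio(open_eye), 3))  # 0.667

ears = [0.3] * 10 + [0.1] * 3 + [0.3] * 10 + [0.1] + [0.3] * 5
print(count_blinks(ears))  # 1: the single-frame dip is ignored as noise
```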

2. Voice Frequency Abnormality Checking

AI-generated voices, while realistic, often lack the natural frequency fluctuations of human speech. Ethical hackers use spectrogram analysis tools to visualize voice frequencies and detect anomalies like:

● Flat modulation patterns (some synthesis systems produce unusually uniform pitch and tone)

● Phase irregularities in speech

● Unnatural breathing pauses

These signs indicate that the voice may have been cloned or synthesized.
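The flat-modulation check can be approximated by measuring how much pitch varies over an utterance. This sketch assumes a pitch tracker (not shown) has already produced one fundamental-frequency estimate in hertz per frame, with 0 marking unvoiced frames. It converts values to semitones so the spread is comparable across low and high voices; the 1.0-semitone cutoff is a hypothetical placeholder, not an established standard.

```python
import math
import statistics


def pitch_spread_semitones(f0_hz: list[float]) -> float:
    """Standard deviation of pitch in semitones relative to the utterance's median pitch."""
    voiced = [f for f in f0_hz if f > 0]  # this sketch assumes 0 marks unvoiced frames
    if not voiced:
        raise ValueError("no voiced frames")
    ref = statistics.median(voiced)
    semitones = [12 * math.log2(f / ref) for f in voiced]
    return statistics.pstdev(semitones)


def looks_monotone(f0_hz: list[float], min_spread: float = 1.0) -> bool:
    """Flag speech whose pitch barely moves: a cue for closer review, not proof of synthesis."""
    return pitch_spread_semitones(f0_hz) < min_spread


natural = [110, 125, 140, 118, 0, 100, 132, 150, 105]
flat = [120, 121, 120, 119, 0, 120, 121, 120, 120]
print(looks_monotone(natural))  # False
print(looks_monotone(flat))     # True
```

Expressive human speakers can also sound flat (reading a script, fatigue), so a check like this is only useful as one input alongside the other signals above.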

3. Facial Micro-Expression Detection

Human faces display micro-expressions — fleeting, involuntary facial movements that reflect genuine emotion.

Deepfake algorithms can mimic broad expressions, but they often fail to replicate these split-second emotional cues.

By using high-speed video analysis, ethical hackers can detect whether a subject’s facial movements align with authentic human emotion or are AI-generated approximations.

4. Movement Consistency Analysis

Natural body movements are fluid and coordinated, something AI often struggles to replicate perfectly.

Analysts check for:

● Unnatural blinking or breathing rhythms

● Rigid muscle motion during speech

● Temporal misalignment between head movement and voice tone

Even a fraction-of-a-second delay between sound and motion can reveal a forgery.
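One way to quantify a delay between sound and motion is to cross-correlate an audio loudness envelope with a mouth-opening signal, both sampled once per video frame, and find the shift that lines them up best. This sketch assumes both series have already been extracted (for example, audio energy per frame and lip distance from a landmark detector, neither shown) and simply tries every lag in a small window using Pearson correlation.

```python
import math
import statistics


def _pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation; returns 0.0 if either series is constant."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def best_lag(audio_env: list[float], mouth_open: list[float], max_lag: int = 10) -> int:
    """Lag (in frames) that best aligns mouth motion with the audio envelope.

    Positive: the mouth moves `lag` frames AFTER the sound; negative: before it.
    Assumes both series have the same length, one value per video frame.
    """
    def corr_at(lag: int) -> float:
        if lag >= 0:
            a, m = audio_env[: len(audio_env) - lag], mouth_open[lag:]
        else:
            a, m = audio_env[-lag:], mouth_open[: len(mouth_open) + lag]
        return _pearson(a, m)

    return max(range(-max_lag, max_lag + 1), key=corr_at)


audio = [0, 0, 1, 3, 5, 2, 0, 0, 4, 6, 1, 0, 0, 2, 5, 3, 0, 0, 0, 0, 1, 4, 2, 0, 0, 0, 0, 0, 0, 0]
mouth = [0, 0, 0] + audio[:-3]  # mouth lags the sound by 3 frames
print(best_lag(audio, mouth))   # 3
```

At 30 frames per second, a 3-frame lag is 0.1 s. A consistent non-zero lag across a clip is suspicious, although ordinary encoding and streaming can also introduce small audio-video offsets.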

5. Metadata Inspection and Digital Fingerprinting

Every digital file carries metadata — hidden information such as camera model, editing software used, and timestamps.

Ethical hackers analyze this metadata to:

● Spot inconsistencies (e.g., a supposedly raw camera recording whose metadata lists editing or AI-generation software, or timestamps that contradict each other)

● Trace a file’s upload history

● Apply digital fingerprinting methods to compare it with known originals

This technique is particularly useful in verifying celebrity endorsement scams and political deepfakes.
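Both checks can be prototyped in a few lines. The first function scans a metadata dictionary, assumed to resemble ExifTool's JSON output with `Software`, `CreateDate` and `ModifyDate` fields, for simple red flags; the list of editing tools is illustrative, not exhaustive. The second part is an average hash, one simple perceptual-fingerprinting technique: unlike a cryptographic hash, it stays stable under small brightness changes, so a low Hamming distance to a known original suggests the same source image. The 8x8 grayscale input is assumed to come from an upstream resize step.

```python
from typing import Dict, List

# Illustrative, not exhaustive: editors whose presence in a "raw" capture is suspicious
EDITING_TOOLS = ("photoshop", "after effects", "premiere", "gimp")


def metadata_red_flags(meta: Dict[str, str]) -> List[str]:
    """Return human-readable warnings for an ExifTool-style metadata dict."""
    if not meta:
        return ["metadata missing or stripped"]
    flags = []
    software = meta.get("Software", "")
    if any(tool in software.lower() for tool in EDITING_TOOLS):
        flags.append(f"edited with: {software}")
    created, modified = meta.get("CreateDate"), meta.get("ModifyDate")
    # EXIF-style "YYYY:MM:DD HH:MM:SS" strings sort chronologically as text
    if created and modified and modified > created:
        flags.append("modified after capture")
    return flags


def average_hash(gray: List[List[float]]) -> int:
    """64-bit fingerprint of an 8x8 grayscale thumbnail: bit is 1 where pixel > mean."""
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")
```

The distance cutoff for "same image" is a tuning choice; a uniformly brightened copy hashes identically, while a locally altered region flips several bits.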

Deepfake detection is not a one-time solution — it’s a never-ending race. As ethical hackers improve their detection systems, deepfake creators are developing smarter AI models capable of bypassing traditional analysis techniques.

This arms race means defenders must continuously:

● Update datasets for AI training

● Collaborate across cybersecurity, media, and law enforcement sectors

● Advocate for regulation and watermarking standards for all AI-generated content

The mission is ongoing — and it’s global.

Ethical hackers now represent the last line of defense between truth and deception. Their expertise ensures that as technology evolves, human trust doesn’t erode completely. From uncovering fake videos of political leaders to protecting corporations from voice-clone scams, ethical hackers are not just securing data — they’re safeguarding reality itself.

5. How Individuals and Businesses Can Protect Themselves from Deepfake Threats

Introduction

In the digital age, seeing is no longer believing. With the rapid rise of deepfake technology (AI-generated media that can mimic real people’s faces, voices, and gestures), the line between authentic and fake has blurred. From manipulated political speeches to cloned corporate voices, deepfakes have evolved from mere internet curiosities into tools for fraud, disinformation, and identity theft. The question is no longer “Can this happen to me?” but “How do I protect myself or my organization from it?” This section explores practical strategies for both individuals and businesses to stay safe in an era where artificial intelligence can deceive human senses.

For Individuals: Staying Alert in a Deepfake World

1. Always Verify Requests Before Acting

Never transfer money, share personal information, or act on urgent requests made over voice or video calls without verifying them first, however convincing the caller seems. Deepfake audio scams often mimic familiar voices, such as family members or managers, asking for urgent help or confidential data.

Action tip:

● If you receive a strange video or voice message asking for money or sensitive information, verify the request through a separate communication channel, such as calling the person back on a number you already have or speaking in person.

2. Be Careful with What You Share Online

Every photo, video, or audio clip you post adds to the digital “dataset” that can be used to create a deepfake of you. Fraudsters scrape social media platforms to collect such media for impersonation or extortion.

Action tip:

● Keep your privacy settings strict.

● Avoid posting high-resolution selfies or long audio clips unnecessarily.

● Think twice before uploading personal moments to public platforms.

3. Use Multi-Factor Authentication (MFA)

A cloned voice or face on its own cannot get past a second authentication factor. Multi-factor authentication requires more than just a password, such as a one-time code from an app on your phone, before anyone can access your accounts.

Action tip:

● Enable MFA for your banking, social media, and email accounts.

● Prefer app-based authenticators (like Google Authenticator or Microsoft Authenticator) over SMS-based ones for extra safety.
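For the curious, the sketch below shows what an app-based authenticator computes: a time-based one-time password as defined in RFC 6238 (TOTP), built on the HMAC-based HOTP algorithm from RFC 4226. The phone and the service share a secret once, at enrollment, and each independently derives a short code from the current 30-second time window, so a caller who can only imitate your voice or face has no way to produce it. Real apps usually receive the secret as a Base32 string inside a QR code; here it is raw bytes for simplicity.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """RFC 4226: HMAC-SHA1 over an 8-byte big-endian counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte pick the 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """RFC 6238: HOTP with counter = number of `step`-second windows since the Unix epoch."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)
```

On the verifying side, the service recomputes the expected code (often also for the adjacent window, to tolerate clock drift) and compares it using `hmac.compare_digest` to avoid leaking timing information.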

4. Stay Educated and Informed

The best defense against deepfakes is awareness. The more you understand how AI-driven deception works, the better you can spot red flags.

Action tip:

● Follow credible cybersecurity sources, tech news, and government advisories.

● Watch for visual inconsistencies in videos (blinking patterns, lighting mismatches, or distorted lips).

● If something feels “too real” or emotionally manipulative—pause and investigate.

For Businesses: Building Deepfake Resilience

Deepfake attacks on companies are no longer hypothetical. Scammers have used AI-cloned executive voices to trick employees into authorizing fund transfers worth millions. Businesses must adopt both technological and human-centered defenses.

1. Implement a Zero-Trust Verification Policy

Traditional verification based on trust (“It sounds like the CEO, so it must be true”) no longer works. A Zero-Trust Policy assumes every request, even from known identities, must be verified through secure channels before action is taken.

Action tip:

● Use code words or secondary confirmation systems for high-value decisions.

● Never rely solely on voice, video, or email for authorization.

2. Train Employees to Recognize Red Flags

Employees are the first line of defense, and often the weakest link if they are not trained properly. Regular training on recognizing social engineering and AI-generated media can substantially reduce risk.

Action tip:

● Conduct quarterly workshops or simulations of voice/video-based scams.

● Encourage employees to report suspicious communication without fear of penalty.

3. Use AI-Based Deepfake Detection Tools

Just as AI creates deepfakes, AI can also help detect them. Modern detection tools analyze pixel-level inconsistencies, voice modulations, and metadata tampering to identify manipulated media.

Action tip:

● Integrate deepfake detection systems into your IT security workflow.

● Collaborate with cybersecurity vendors specializing in synthetic media analysis.

4. Develop a Formal Incident Response Plan

Even with precautions, deepfake incidents may occur. A well-defined Incident Response Plan (IRP) ensures quick containment, communication control, and recovery.

Action tip:

● Define clear escalation steps for suspected fake media.

● Involve legal, PR, and IT security teams in the response framework.

● Keep backups of critical video/audio communications for authenticity verification.
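The zero-trust rule and the escalation steps above can be written down as an explicit triage policy, which makes them easier to audit and to build into payment workflows. The sketch below is hypothetical: the threshold, field names and outcomes are placeholders an organization would replace with its own rules. Note that the channel a request arrives on (voice, video or email) never counts as verification by itself.

```python
from dataclasses import dataclass

# Hypothetical policy limit; each organization would set its own
HIGH_VALUE_THRESHOLD = 10_000


@dataclass
class PaymentRequest:
    requester: str                    # claimed identity, e.g. "CEO"
    amount: float
    callback_confirmed: bool = False  # confirmed by calling a number already on file
    code_word_ok: bool = False        # pre-agreed code word given correctly


def triage(req: PaymentRequest) -> str:
    """Decide what to do with a request under a zero-trust policy.
    How the request arrived (voice, video, email) is deliberately ignored."""
    if req.amount < HIGH_VALUE_THRESHOLD:
        # Even small requests need one out-of-band confirmation
        return "approve" if req.callback_confirmed else "hold: verify out of band"
    if req.callback_confirmed and req.code_word_ok:
        return "approve"
    if req.callback_confirmed or req.code_word_ok:
        return "hold: second confirmation required"
    # High value and nothing verified: treat as a suspected deepfake incident
    return "escalate: possible impersonation"
```

For example, an urgent video call from “the CEO” asking for a large transfer, with neither check completed, lands in the escalation path instead of being paid.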

5. Educate Leadership and Key Executives

Executives and public figures are among the most frequent targets of deepfake scams because of their visibility and authority.

Action tip:

● Train leaders to recognize impersonation attempts.

● Limit public release of high-definition videos and conference recordings that can be exploited for cloning.

● Use watermarking or digital signature tools to authenticate official media releases.
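One lightweight way to support this is to publish a list of cryptographic hashes for every official video or audio release, so anyone can check whether a circulating file is byte-for-byte identical to an official one. The sketch below builds and checks such a manifest with SHA-256. It is a bare minimum under stated assumptions: any re-encoding changes the hash (which is why watermarks and perceptual fingerprints exist), and in practice the manifest itself would need to be signed, for example with Ed25519 keys or through a content-provenance standard such as C2PA, and served from a channel the organization controls.

```python
import hashlib
import json
import os
from typing import Iterable


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-256 of a file, read in chunks so large videos need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(paths: Iterable[str]) -> str:
    """JSON mapping of file name -> SHA-256 for a batch of official releases."""
    return json.dumps({os.path.basename(p): sha256_file(p) for p in paths},
                      indent=2, sort_keys=True)


def is_official(path: str, manifest_json: str) -> bool:
    """True if the file's hash matches any entry in the published manifest."""
    return sha256_file(path) in set(json.loads(manifest_json).values())
```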

Conclusion: Vigilance Is the New Normal

The deepfake era demands a shift in mindset—from trusting what we see to verifying what we receive. For individuals, it means protecting your digital identity with care and skepticism. For businesses, it requires structured policies, employee awareness, and the integration of AI-based security. In a world where technology can imitate reality, human judgment, verification, and education remain our strongest defenses. Staying informed and alert isn’t just cybersecurity—it’s digital survival.

6. Career Growth: Deepfake Defense and Ethical Hacking

As deepfakes become more advanced and widespread, they are reshaping the cybersecurity landscape. The increasing use of AI-generated media in fraud, misinformation, and identity theft has created a strong demand for professionals skilled in deepfake detection, ethical hacking, and AI-driven security defense. Organizations across the world are now hiring specialists who understand both cybersecurity fundamentals and artificial intelligence technologies.

The Growing Demand

With deepfake-related crimes on the rise, industries like finance, government, media, and defense are investing heavily in AI security experts. These professionals are responsible for identifying manipulated media, preventing digital impersonation, and safeguarding communication systems from synthetic attacks. The need for such roles is not only urgent but also expanding rapidly, offering excellent career stability, high pay, and global opportunities.

Key Career Paths and Skill Areas

Professionals entering this field can explore several specialized domains. Each requires a mix of technical expertise, analytical thinking, and ethical understanding.

1. Cyber Forensics

Cyber forensics involves investigating and analyzing digital evidence to uncover cybercrimes. In the context of deepfakes, forensic experts focus on identifying manipulated audio, video, and image files.

Skills Required:

● Digital evidence analysis and metadata verification

● Understanding of deepfake detection tools and software

● Report documentation for legal or corporate use

● Familiarity with AI-generated content patterns

2. Ethical Hacking

Ethical hackers simulate cyberattacks to find and fix vulnerabilities before criminals exploit them.

In this new age, they also focus on testing AI systems and voice/video authentication platforms for weaknesses.

Skills Required:

● Penetration testing and vulnerability assessment

● Programming knowledge (Python, C++, JavaScript)

● Understanding of adversarial AI techniques

● Experience with cybersecurity tools like Kali Linux and Burp Suite

3. Social Engineering Defense

Deepfakes are often used as part of social engineering scams to manipulate people emotionally or psychologically.

Professionals in this field design awareness programs and detection strategies to prevent human-targeted cyberattacks.

Skills Required:

● Knowledge of social manipulation and behavioral psychology

● Phishing simulation and response planning

● Training design for employees and organizations

● Use of AI-based behavioral monitoring tools

4. AI Security

AI security specialists protect artificial intelligence systems from manipulation, bias, and exploitation.

They ensure that machine learning models and algorithms remain secure against data tampering and adversarial-input attacks.

Skills Required:

● Understanding of machine learning and neural networks

● Detection of adversarial AI attacks

● Securing data pipelines and AI deployment environments

● Knowledge of algorithm transparency and fairness

5. Media Authenticity Verification

This emerging domain focuses on verifying whether videos, photos, or audio recordings are genuine or digitally altered. Experts work closely with news agencies, courts, and online platforms to combat misinformation.

Skills Required:

● Familiarity with AI-powered deepfake detection tools

● Use of blockchain for content traceability

● Digital watermarking and cryptographic verification

● Legal and ethical understanding of digital media

Industries Hiring in This Field

The demand for experts in deepfake defense and ethical hacking extends across multiple sectors:

● Finance and Banking: To prevent voice-cloned fund transfer scams and identity fraud.

● Government and Defense: For counter-intelligence and media authenticity verification.

● Media and Journalism: To detect and remove manipulated visuals and fake news.

● Corporate Enterprises: To secure executive communication and protect brand reputation.

● Research and Education: To develop AI ethics, security models, and deepfake detection frameworks.

Educational Background and Certifications

To build a career in this domain, a solid foundation in computer science, cybersecurity, or artificial intelligence is essential.

Candidates may begin with degrees such as B.Tech, B.Sc., or diplomas in related fields, followed by specialized certifications.

Recommended Certifications:

● CEH (Certified Ethical Hacker)

● CHFI (Computer Hacking Forensic Investigator)

● CompTIA Security+

● AI Security & Deepfake Detection Certifications (offered by Google, Microsoft, Coursera, etc.)

● Certified Deepfake Analyst (Emerging Programs)

Career Growth and Salary Outlook

Careers in deepfake defense and AI cybersecurity are among the fastest-growing and most rewarding in tech.

● Entry-Level Professionals: ₹6–12 LPA (e.g., cybersecurity analyst, junior ethical hacker)

● Mid-Level Experts: ₹15–30 LPA (e.g., AI security engineer, forensic investigator)

● Global Roles: $80,000–$200,000+ annually, especially in AI governance and digital forensics

As organizations prioritize digital authenticity, the demand for skilled professionals in these fields will continue to grow worldwide.

The Future of Deepfake Defense Careers

The battle against deepfakes is not just about technology; it’s about preserving truth and trust in the digital world. Professionals trained in deepfake defense, ethical hacking, and AI security will play a crucial role in protecting businesses, governments, and individuals from AI-driven deception. In short, the future belongs to those who combine technical intelligence with ethical responsibility: the next generation of cybersecurity heroes who will defend not just data, but digital reality itself.

7. Learn Ethical Hacking and Cyber Defense at Pinaki IT Hub

In today’s fast-evolving digital world, cybersecurity is more than just a skill; it’s a responsibility. At Pinaki IT Hub, we believe in empowering students not just to understand technology but to protect the digital future from threats like deepfakes, cyber fraud, and AI-based manipulation. Our programs are designed to go far beyond classroom theory. We focus on real-world problem-solving, hands-on projects, and guided mentorship to help learners develop the expertise needed to thrive in the cybersecurity and ethical hacking domain.

What Makes Pinaki IT Hub Different

At Pinaki IT Hub, we bridge the gap between learning and practical defense. Each program is structured to simulate real cyber environments, giving students the confidence to tackle genuine security challenges.

Our training highlights include:

● Hands-on Penetration Testing and Cyber Forensics Sessions: Students gain direct experience with professional hacking tools, security frameworks, and forensic analysis techniques. They learn how to identify vulnerabilities, assess risks, and strengthen system defenses effectively.

● Deepfake Detection and AI-Risk Assessment Training: With the growing threat of AI-generated media, our learners explore the latest deepfake detection technologies, media authenticity verification, and ethical use of AI in cybersecurity.

● Real-World Cyber Incident Simulations: Trainees participate in live simulation exercises that replicate actual cyberattack scenarios. These help build crisis response skills and prepare them to manage real incidents confidently and efficiently.

● Personal Mentorship from Industry Experts: Every student receives guidance from experienced cybersecurity professionals who have worked across global industries. This mentorship ensures personalized learning, career advice, and real-world insights.

● Global Certification Preparation and Placement Support: Our courses include preparation for international certifications such as CEH, CHFI, and CompTIA Security+. We also offer job placement support through our global network of industry connections.

Why Choose Pinaki IT Hub

At Pinaki IT Hub, learning is not confined to lectures. Students learn by doing: solving real problems, securing live systems, and analyzing genuine cyber threats. Our goal is to create professionals who are not just job-ready but future-ready, capable of leading the next generation of ethical hackers, digital forensics experts, and AI security specialists.

When you train with Pinaki IT Hub, you don’t just learn cybersecurity —

Start your cybersecurity journey with Pinaki IT Hub and step into the future-ready cybersecurity workforce.

Powered by Knowledge. Driven by Purpose.